I have worked with Microsoft DFS for many years, and one of the scariest parts of DFS was always initial replication. The tools to monitor and troubleshoot replication were not good or helpful enough, and things often went south if there was something unusual in the file set (like extremely long file names with special characters). The time it takes to finish initial replication is also troublesome: it can take days.

Recently, I had to set up another DFS deployment from scratch, and I was not happy about the initial seed. However, since Windows Server 2012 R2 there have been some important changes to DFS Replication.

Before we begin

Be sure to test this in a lab first. When doing this in production, make sure you have a solid backup solution in place before you start making changes to DFS.

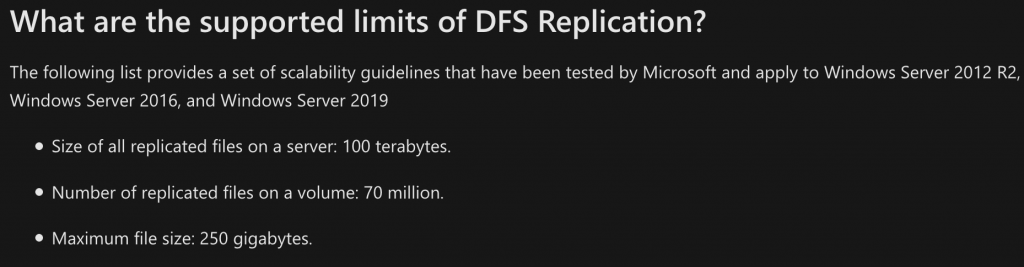

DFS has had significant upgrades since Windows Server 2012 R2, so it is a good option if you know the limitations of the technology and can still work within them.

Database cloning and increased limits are huuuge upgrades for DFS.

Here is a good FAQ for DFS Replication: https://docs.microsoft.com/en-us/windows-server/storage/dfs-replication/dfsr-faq

Thanks to Ned Pyle for his great write-up on this topic. It was the inspiration for this article.

Prerequisites

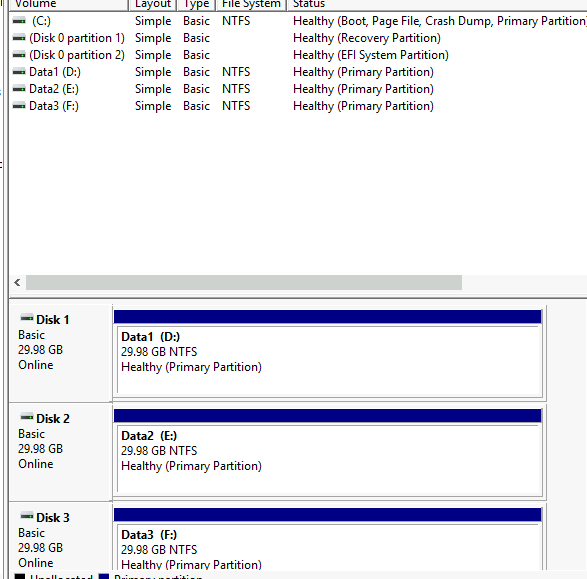

Simple enough: I have a domain with a domain controller and two DFS servers, TDFS1 and TDFS2, to test my setup with.

If you have really large DFS data sets, I suggest you give your VMs plenty of hardware (many CPU cores and as much RAM as you can spare).

Each DFS VM has three additional 30 GB drives besides the system drive, so we can properly test DFS replication.

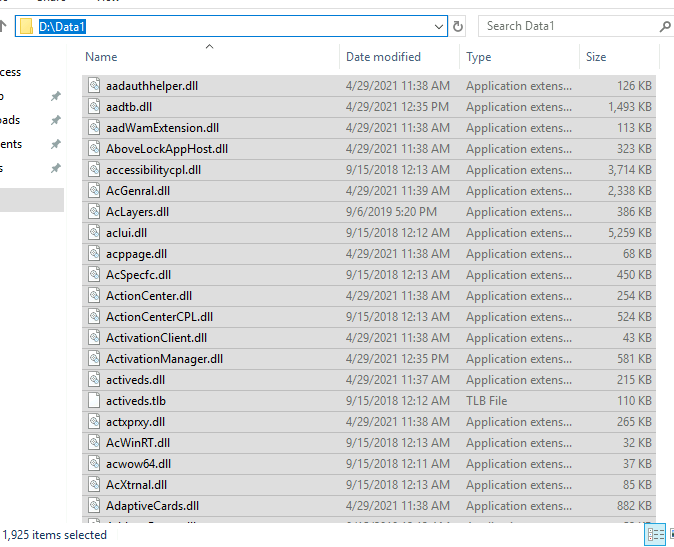

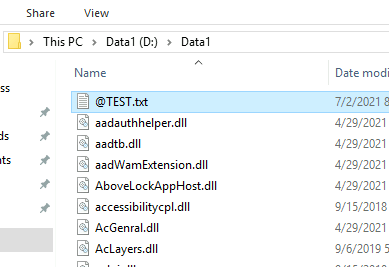

On TDFS1, we are going to create a folder named D:\Data1 on the D drive.

I filled that folder with 1925 files I copied from C:\Windows\SysWow64

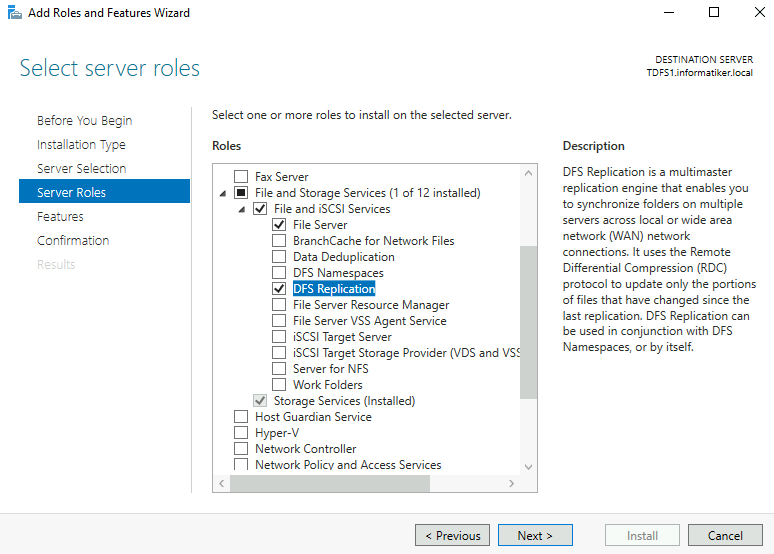

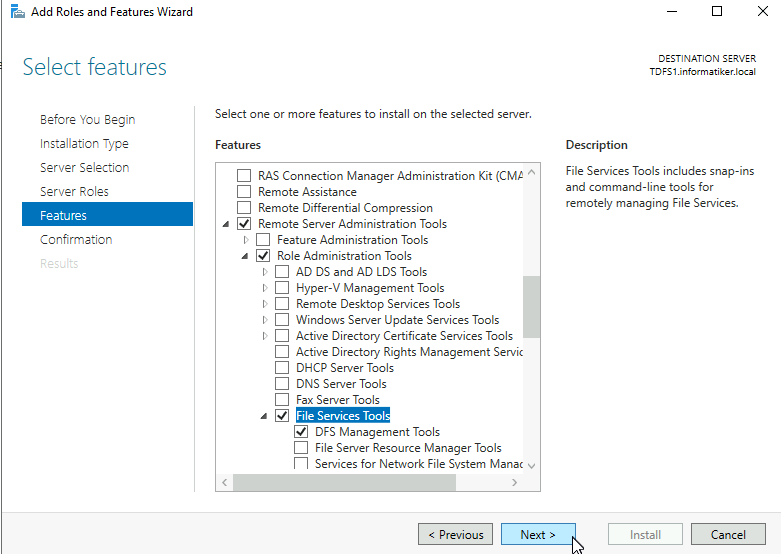

Add the following roles and features to both TDFS1 and TDFS2:

DFS Replication

Remote Server Administration Tools
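If you prefer PowerShell for this step, here is one possible sketch. It assumes the Windows feature names FS-DFS-Replication (DFS Replication) and RSAT-DFS-Mgmt-Con (DFS Management Tools, which as far as I know also provide the DFSR PowerShell module). Get-MissingDfsrFeature is a hypothetical helper that only reports which of those features are not installed yet. The Server Manager cmdlets are left as comments, so the helper itself can be tried on any machine.

```powershell
# Hypothetical helper: report which required feature names are missing.
# Assumed feature names: FS-DFS-Replication = DFS Replication,
# RSAT-DFS-Mgmt-Con = DFS Management Tools (console + DFSR module).
function Get-MissingDfsrFeature {
    param(
        [string[]]$InstalledNames = @(),
        [string[]]$Required = @('FS-DFS-Replication', 'RSAT-DFS-Mgmt-Con')
    )
    # Keep only the required names that are not in the installed list.
    $Required | Where-Object { $_ -notin $InstalledNames }
}

# On TDFS1 and TDFS2, in an elevated session (Windows Server only):
# $installed = (Get-WindowsFeature | Where-Object Installed).Name
# $missing   = @(Get-MissingDfsrFeature -InstalledNames $installed)
# if ($missing.Count -gt 0) { Install-WindowsFeature -Name $missing -IncludeManagementTools }
```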

Ok, we have all we need to proceed.

Last but not least, run POWERSHELL WITH ELEVATED RIGHTS (as admin) throughout this guide.

Clone DFS Database

It is important that we start this only on TDFS1. Do not bring TDFS2 into the picture at the beginning. If we do, classic initial replication will start, and we do not want that.

Again, we first configure TDFS1, where the data is. Do not connect TDFS1 and TDFS2 through any services yet!

One more important thing: if the folder and data you are using were already part of DFS Replication, don’t start here. First scroll down to the CLEAN OLD DFSR DATA part of the guide.

On TDFS1, start an elevated PowerShell session (as admin) and type in the following.

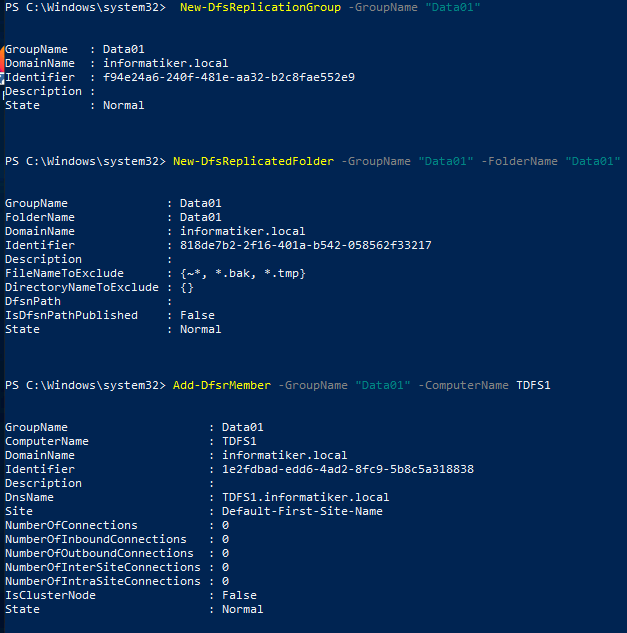

We are going to create a new replication group named Data01:

New-DfsReplicationGroup -GroupName "Data01"

We are also going to add the Data1 folder to it as a replicated folder named Data01:

New-DfsReplicatedFolder -GroupName "Data01" -FolderName "Data01"

We are now going to add the TDFS1 server to the Data01 replication group:

Add-DfsrMember -GroupName "Data01" -ComputerName TDFS1

Then we point this group to the location of the folder and make TDFS1 the primary member of the replication group:

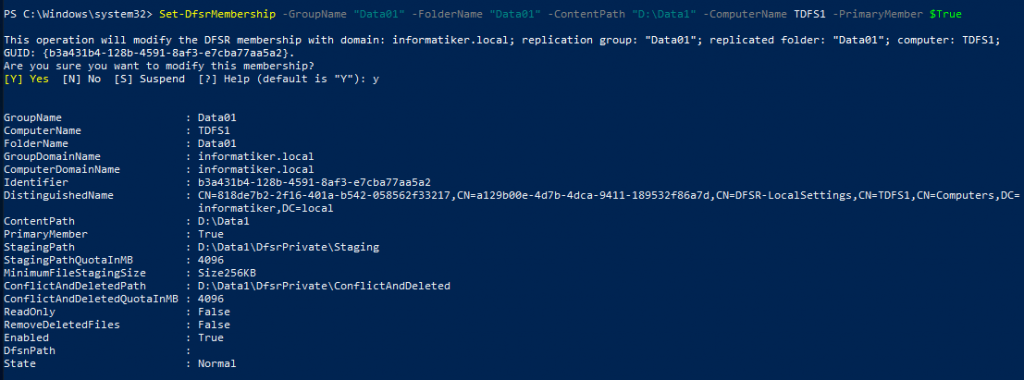

Set-DfsrMembership -GroupName "Data01" -FolderName "Data01" -ContentPath "D:\Data1" -ComputerName TDFS1 -PrimaryMember $True

Next, update the configuration from AD:

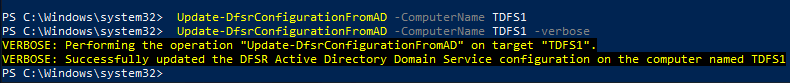

Update-DfsrConfigurationFromAD -ComputerName TDFS1

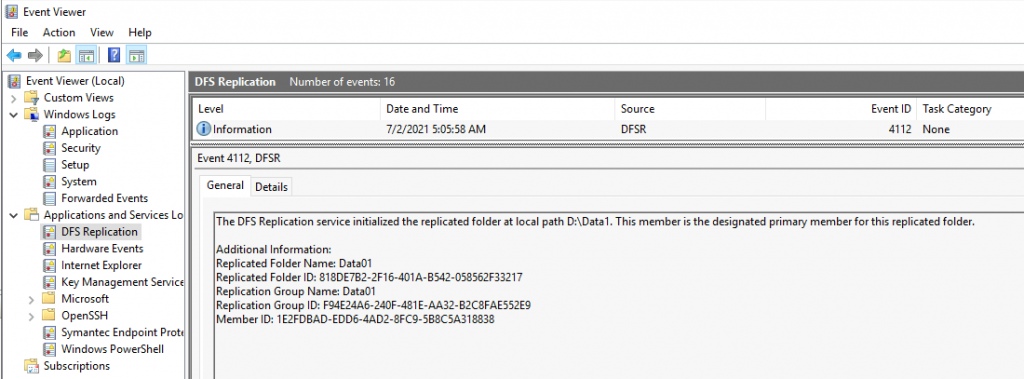

When event 4112 shows up in the DFS Replication log, we are clear to go further. This may take a few minutes, so be patient and watch the log.

If you are stuck here with event ID 6404, scroll down to CLEAN OLD DFSR DATA.

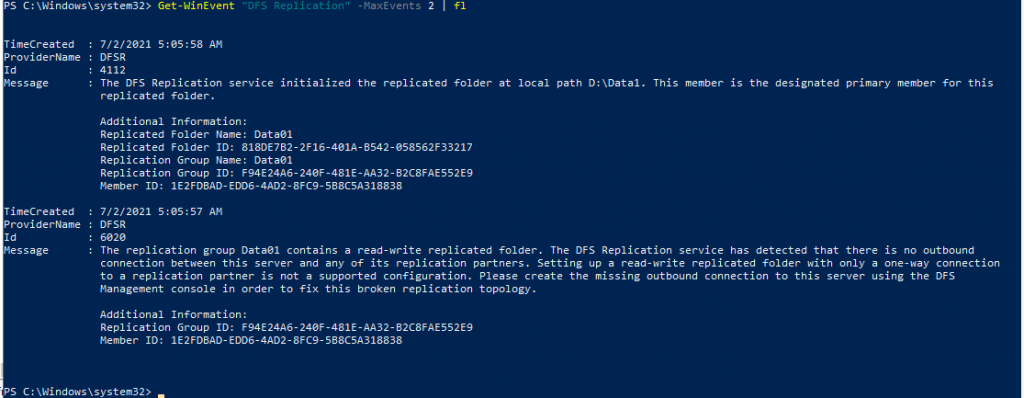

You can also check this through PowerShell by typing in:

Get-WinEvent "DFS Replication" -MaxEvents 2 | fl

Event 6020 is expected in a cloning scenario. There is nothing to fear.
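Instead of re-running Get-WinEvent by hand, you can poll the log until a given event ID appears. The function below is a minimal sketch and not part of the DFSR module. Wait-DfsrEvent, its parameters, and the default timeout and poll interval are my own assumptions. The event source can be swapped through -GetEvents, so the logic can be tried without a DFSR server. Record the time before you run the command you are waiting on and pass it as -After, so an old event from an earlier attempt is not mistaken for a new one.

```powershell
# Hypothetical helper: poll the DFS Replication log until an event ID appears.
function Wait-DfsrEvent {
    param(
        [Parameter(Mandatory)][int]$Id,
        [datetime]$After = [datetime]::MinValue,
        [int]$TimeoutSeconds = 1800,
        [int]$PollSeconds = 30,
        # Default source: the newest 50 entries of the DFS Replication log.
        [scriptblock]$GetEvents = {
            Get-WinEvent -LogName 'DFS Replication' -MaxEvents 50 -ErrorAction SilentlyContinue
        }
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    while ($true) {
        # Return the first matching event logged at or after -After.
        $hit = & $GetEvents |
            Where-Object { $_.Id -eq $Id -and $_.TimeCreated -ge $After } |
            Select-Object -First 1
        if ($hit) { return $hit }
        if ((Get-Date) -ge $deadline) { return $null }   # gave up waiting
        Start-Sleep -Seconds $PollSeconds
    }
}

# Usage on TDFS1:
# $start = Get-Date
# Update-DfsrConfigurationFromAD -ComputerName TDFS1
# Wait-DfsrEvent -Id 4112 -After $start | Format-List
```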

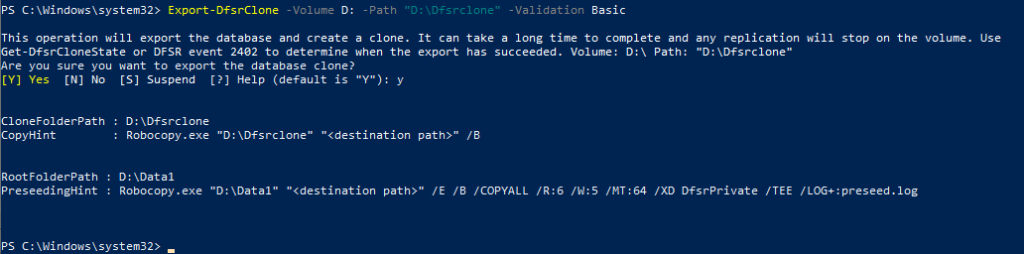

Ok, we are now going to export the cloned database and its XML for the D drive.

The export requires that the output folder for the database and XML already exists (we will create one). Also, there shouldn’t be any sync or initial database build in progress on the D drive. Again, make sure there is no data movement on the drive/folder we just put into DFS.

It is also extremely important that no users add, edit, or update files during this process. This applies especially to the downstream server!!

New-Item -Path "D:\Dfsrclone" -ItemType Directory

In this example I set validation to Basic. There are three levels of validation: None, Basic and Full.

None – no validation of files on the source or destination server. Requires perfectly preseeded data.

Basic – recommended by Microsoft. Each file’s existing database record is updated with a hash of the ACL, the file size, and the last modified time. A good mix of fidelity and performance.

Full – the same hashing DFSR uses in normal operation. Not recommended for data sets larger than 10 TB.
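The folder preparation can be made a bit more defensive before the export runs. In the sketch below, Initialize-DfsrCloneFolder (a hypothetical helper) creates the export folder if it is missing. As an extra precaution of my own, not a documented requirement, it also refuses to reuse a folder that already contains files, so an old database clone and XML from an earlier attempt do not get mixed with a new export.

```powershell
# Hypothetical helper: make sure the clone export folder exists and is empty.
function Initialize-DfsrCloneFolder {
    param([Parameter(Mandatory)][string]$Path)
    if (-not (Test-Path -LiteralPath $Path)) {
        New-Item -ItemType Directory -Path $Path | Out-Null
    }
    # Precaution (assumption): leftover files probably come from an earlier export.
    if (Get-ChildItem -LiteralPath $Path -Force | Select-Object -First 1) {
        throw "Clone folder '$Path' is not empty; move or delete the old export first."
    }
    Get-Item -LiteralPath $Path
}

# Usage on TDFS1, before running Export-DfsrClone:
# Initialize-DfsrCloneFolder -Path 'D:\Dfsrclone' | Out-Null
```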

Export-DfsrClone -Volume D: -Path "D:\Dfsrclone" -Validation Basic

Again, do not make any changes on the destination server (TDFS2): no writing of files or folders to this server! It is also highly advisable not to use TDFS1 while this operation is ongoing. It can be used, but it is best if it isn’t.

Notice the PreseedingHint in the output. It is a command that can be used to transfer the data to the destination server.

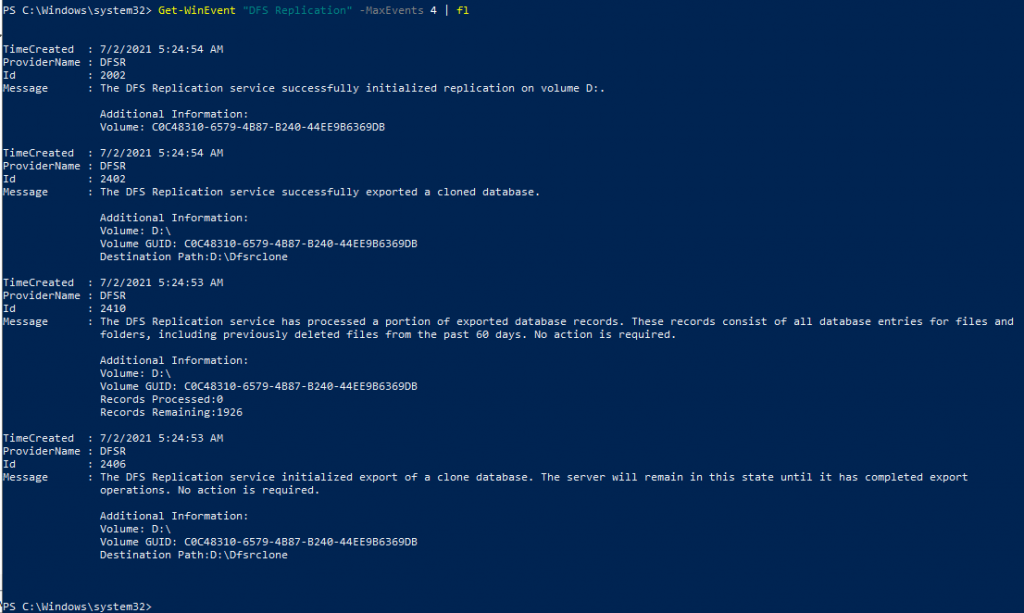

Let’s take a look at Event Viewer. We are expecting event 2402, which indicates success. With my data set it took 15 minutes to get event 2402. There were quite a few 2410 events before 2402 (this is in a scenario with a large number of files).

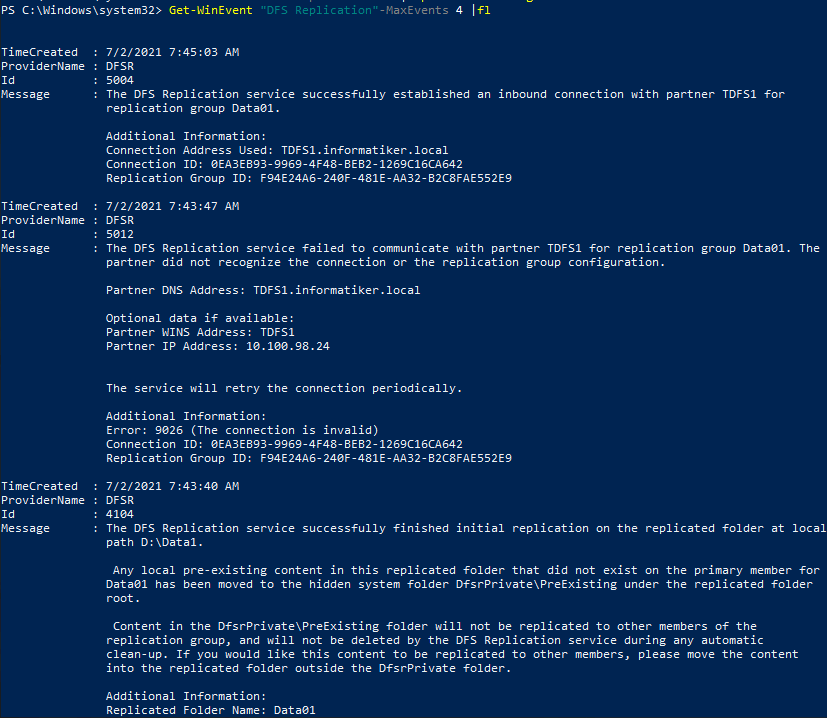

Get-WinEvent "DFS Replication" -MaxEvents 4 | fl

2406 and 2410 are progress indicators, and 2402 and 2002 indicate that everything is back to normal. Ok, we can proceed.
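If you don't want to read through every entry, the small sketch below classifies a list of event IDs. It uses only the meanings described in this guide. For the export, 2406 and 2410 mean progress and 2402 means completion. For the later import step, 2412 and 2416 mean progress and 2404 means completion. Get-DfsrCloneStatus is a hypothetical helper, and the mapping reflects what I observed rather than an official event reference.

```powershell
# Hypothetical helper: classify recent DFS Replication event IDs for a clone operation.
function Get-DfsrCloneStatus {
    param(
        [Parameter(Mandatory)][int[]]$EventIds,
        [ValidateSet('Export', 'Import')][string]$Operation = 'Export'
    )
    # Event meanings as described in this guide.
    $ids = @{
        Export = @{ Done = 2402; Progress = @(2406, 2410) }
        Import = @{ Done = 2404; Progress = @(2412, 2416) }
    }[$Operation]

    if ($EventIds -contains $ids.Done) { return 'Complete' }
    if (@($EventIds | Where-Object { $_ -in $ids.Progress }).Count -gt 0) { return 'InProgress' }
    return 'Unknown'
}

# Usage: classify the newest 20 entries of the log.
# Get-DfsrCloneStatus -EventIds (Get-WinEvent -LogName 'DFS Replication' -MaxEvents 20).Id
```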

Preseed to the destination machine (TDFS2)

There should be no files, folders or database on the machine we are going to clone to (TDFS2). Microsoft also recommends that no file shares are created until cloning is complete. Also, no users should be adding files or folders on the destination machine. Complete silence is the best course of action for the destination machine.

As mentioned above, the export gave us a PreseedingHint command. We are now going to use it. We just need to fill in our destination server.

“\\TDFS2\D$\Data1” is my destination path.

Ok, let’s run this command on TDFS1:

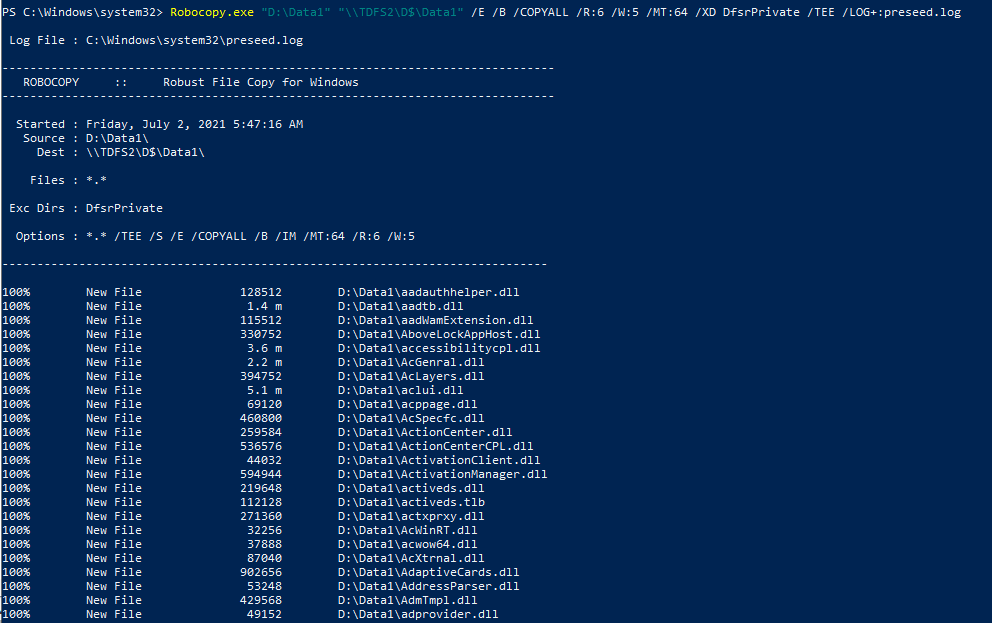

Robocopy.exe "D:\Data1" "\\TDFS2\D$\Data1" /E /B /COPYALL /R:6 /W:5 /MT:64 /XD DfsrPrivate /TEE /LOG+:preseed.log

Let robocopy create all the folders and files and copy everything that is necessary. Do not use robocopy /MIR on the root. Do not pre-create the destination folders, and do not use robocopy to copy over files you copied previously. Just use the command above without changing it. Robocopy is sensitive as it is, and we don’t need to mess with it more.

The copy was successful.

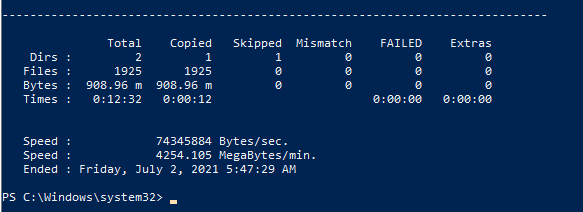
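Robocopy does not stop a script when a copy fails, so it is worth checking its exit code after the preseed. Robocopy exit codes are bit flags, and any value of 8 or higher means at least one copy failed. The helper name Test-RobocopyExitCode is my own. The robocopy line in the usage comment is the PreseedingHint command from above.

```powershell
# Hypothetical helper: interpret a robocopy exit code.
# Bit flags: 1 = files copied, 2 = extra files at destination,
# 4 = mismatched files/folders, 8 = some copies failed, 16 = fatal error.
function Test-RobocopyExitCode {
    param([Parameter(Mandatory)][int]$ExitCode)
    # Codes 0-7 mean "no failures"; 8 and above mean something went wrong.
    return $ExitCode -lt 8
}

# Usage on TDFS1:
# Robocopy.exe "D:\Data1" "\\TDFS2\D$\Data1" /E /B /COPYALL /R:6 /W:5 /MT:64 /XD DfsrPrivate /TEE /LOG+:preseed.log
# if (-not (Test-RobocopyExitCode -ExitCode $LASTEXITCODE)) {
#     throw "Preseed copy failed (robocopy exit code $LASTEXITCODE); check preseed.log"
# }
```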

We are now also going to copy the Dfsrclone folder with the database and XML we created earlier:

Robocopy.exe "D:\Dfsrclone" "\\TDFS2\D$\dfsrclone" /B

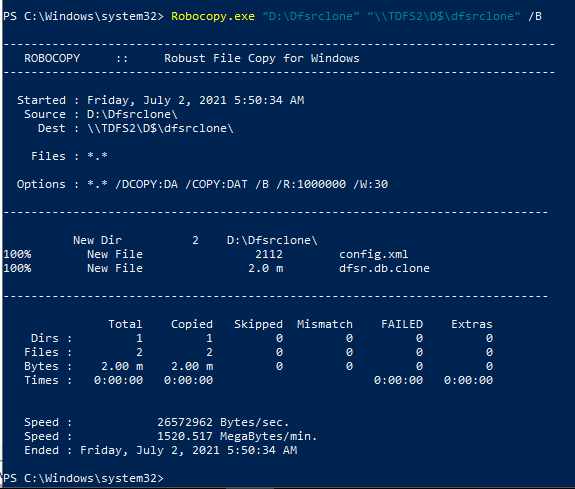

Before we go further, it is important to compare some hashes to make sure we preseeded the TDFS2 server correctly.

The commands below show hashes for files whose names start with act:

Get-DfsrFileHash \\TDFS1\D$\DATA1\act*

Get-DfsrFileHash \\TDFS2\D$\DATA1\act*

Check random files across your data set to confirm that the hashes match on both servers.
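Comparing a random sample is easier if the two sides are matched up automatically. The sketch below assumes that Get-DfsrFileHash returns objects with Path and FileHash properties. It pairs files by their path relative to each share root. Compare-PreseedHash and the sample size of 50 are my own choices. Any status other than Match suggests the preseed should be checked before you import the clone.

```powershell
# Hypothetical helper: pair source and destination hashes by relative path.
function Compare-PreseedHash {
    param(
        [Parameter(Mandatory)][object[]]$SourceHashes,
        [Parameter(Mandatory)][string]$SourceRoot,
        [Parameter(Mandatory)][object[]]$DestinationHashes,
        [Parameter(Mandatory)][string]$DestinationRoot
    )
    # Index destination hashes by path relative to the destination root
    # (PowerShell hashtables are case-insensitive, like Windows paths).
    $dest = @{}
    foreach ($h in $DestinationHashes) {
        $dest[$h.Path.Substring($DestinationRoot.Length)] = $h.FileHash
    }
    foreach ($h in $SourceHashes) {
        $rel = $h.Path.Substring($SourceRoot.Length)
        if (-not $dest.ContainsKey($rel)) { $status = 'Missing' }
        elseif ($dest[$rel] -cne $h.FileHash) { $status = 'Mismatch' }
        else { $status = 'Match' }
        [pscustomobject]@{ RelativePath = $rel; Status = $status }
    }
}

# Usage from TDFS1: hash 50 random files on both servers and list problems.
# $sample = Get-ChildItem '\\TDFS1\D$\Data1' -File -Recurse | Get-Random -Count 50
# $src = $sample | ForEach-Object { Get-DfsrFileHash -Path $_.FullName }
# $dst = $sample | ForEach-Object {
#     Get-DfsrFileHash -Path ($_.FullName -replace '^\\\\TDFS1\\', '\\TDFS2\') }
# Compare-PreseedHash -SourceHashes $src -SourceRoot '\\TDFS1\D$\Data1' `
#     -DestinationHashes $dst -DestinationRoot '\\TDFS2\D$\Data1' |
#     Where-Object Status -ne 'Match'
```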

_____________________________________

CLEAN OLD DFSR DATA

This part is extremely important if the data you are planning to preseed was already in DFS and you wish to recreate DFS with the same data set. For example, I took a .VHDX with all my files and folders that was part of DFS, attached it to a new VM, and wanted to replicate it to a second, completely new and clean VM. At the beginning of this process you will probably get a 6404 error.

We need to ensure that the DFSR folder does not exist on the D drive of TDFS2.

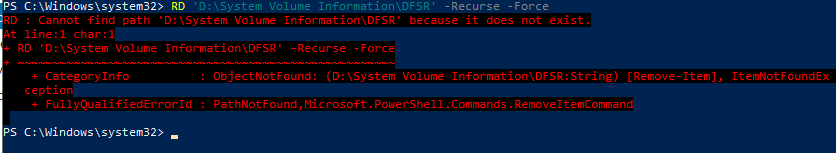

RD 'D:\System Volume Information\DFSR' -Recurse -Force

Everything is ok in my case, since the VM is new and this data was never replicated before. BUT, in a real-life scenario this command probably won’t work if there is already a System Volume Information\DFSR folder on the volume you wish to replicate.

If the data was already replicated and you have a \System Volume Information\DFSR folder that cannot be deleted completely because of errors, you can use the workaround below.

Create an empty folder on the D drive. I will create a folder that is literally named empty.

Then run the following command:

robocopy D:\empty "D:\system volume information\dfsr" /MIR

After the robocopy command finishes, use the RD command above to delete the folder.

We are not out of the woods yet. This probably means that the data you are trying to preseed was already part of DFS replication.

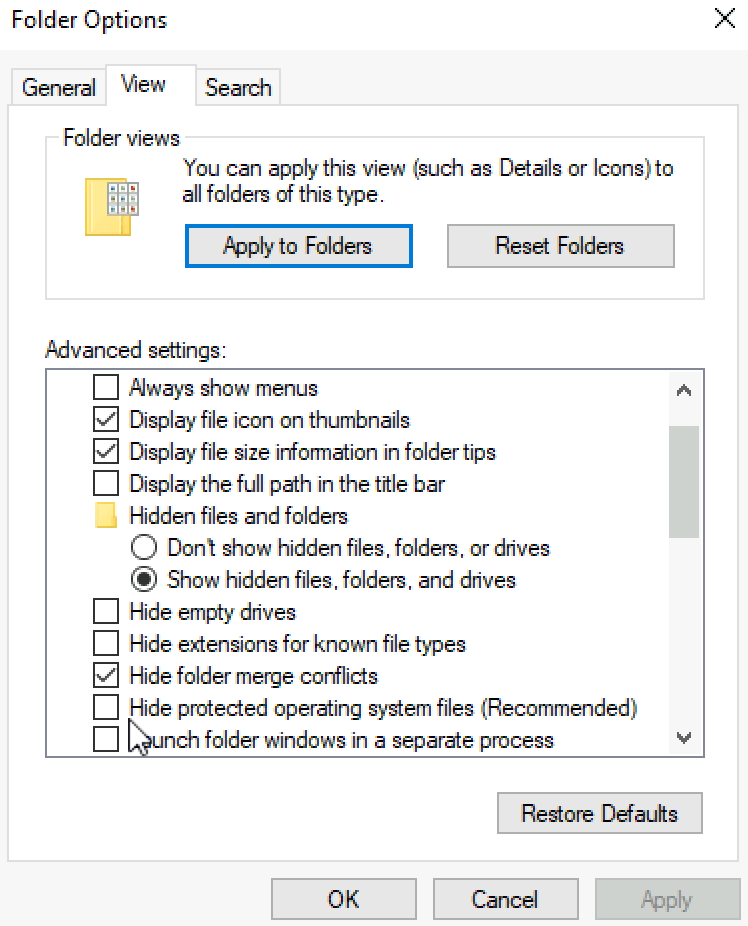

In File Explorer, go to View | Options | Change folder and search options | View tab. Select Show hidden files, folders and drives, and clear Hide protected operating system files (you can hide them again later).

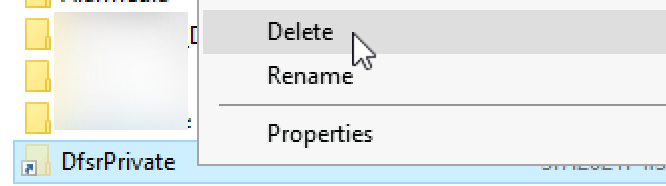

Go back to the volume/folder you wish to preseed, look for a folder named DfsrPrivate, and delete it.
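This cleanup can also be scripted. The sketch below only looks for a DfsrPrivate folder directly under the content path you pass in, which is where DFSR keeps it for a replicated folder. It supports -WhatIf, so you can see what would be deleted before anything is removed. Remove-StaleDfsrPrivate is a hypothetical helper, not a DFSR cmdlet.

```powershell
# Hypothetical helper: delete a leftover DfsrPrivate folder from old replication.
function Remove-StaleDfsrPrivate {
    [CmdletBinding(SupportsShouldProcess)]
    param([Parameter(Mandatory)][string]$ContentPath)

    $privateDir = Join-Path $ContentPath 'DfsrPrivate'
    # Test-Path also finds hidden folders such as DfsrPrivate.
    if (-not (Test-Path -LiteralPath $privateDir)) { return $false }

    if ($PSCmdlet.ShouldProcess($privateDir, 'Remove leftover DfsrPrivate folder')) {
        Remove-Item -LiteralPath $privateDir -Recurse -Force
    }
    return $true   # a DfsrPrivate folder was found (and removed unless -WhatIf)
}

# Usage: preview first, then delete.
# Remove-StaleDfsrPrivate -ContentPath 'D:\Data1' -WhatIf
# Remove-StaleDfsrPrivate -ContentPath 'D:\Data1'
```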

Now you can continue. If you were stuck with event ID 6404 at the beginning, try again by recreating the group and the folder and adding the server.

___________________________________________________

Ok, we will now import the cloned database on TDFS2 (run the command on TDFS2).

At the Import-DfsrClone step I got an error I could not easily get past: “Could not import the database clone for the volume D. Confirm that you are running in an elevated Powershell session, the DFSR is running, and you are member of local Administrators group…” My user is a domain admin/local admin, I ran PowerShell as admin, and the DFSR service was running. I got past this after I restarted the DFS Replication service (DFSR).

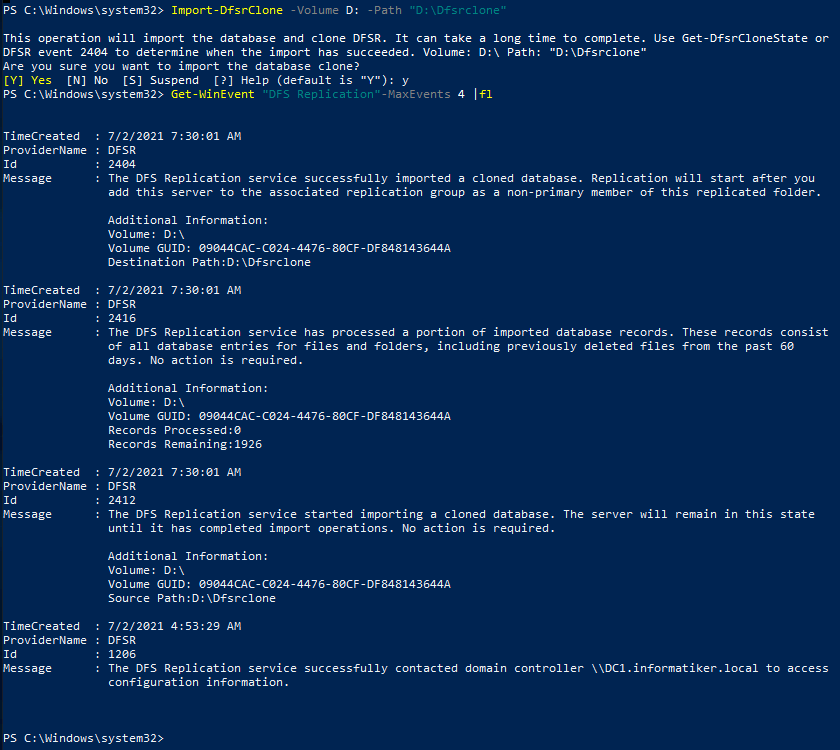

Import-DfsrClone -Volume D: -Path "D:\Dfsrclone"

Ok, now we wait for event 2404:

Get-WinEvent "DFS Replication" -MaxEvents 4 | fl

And we have event 2404. 2416 and 2412 are progress indicators. In real life, with a lot of data, it also took 10-15 minutes to get to event 2404.
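Restarting the DFSR service was what got me past the import error, so that workaround can be folded into a small retry wrapper. This is a sketch under my own assumptions. Invoke-DfsrCloneImport is a hypothetical name, and it retries only once. The import and restart actions are passed in as script blocks, defaulting to Import-DfsrClone and Restart-Service -Name DFSR, so the retry logic can be tested on its own.

```powershell
# Hypothetical wrapper: run the clone import; on failure restart DFSR and retry once.
function Invoke-DfsrCloneImport {
    param(
        [scriptblock]$Import  = { Import-DfsrClone -Volume 'D:' -Path 'D:\Dfsrclone' -ErrorAction Stop },
        [scriptblock]$Restart = { Restart-Service -Name DFSR -ErrorAction Stop }
    )
    try {
        & $Import
    } catch {
        Write-Warning "Import failed: $($_.Exception.Message) Restarting DFSR and retrying once."
        & $Restart
        & $Import   # a second failure is not caught and reaches the caller
    }
}

# Usage on TDFS2, then wait for event 2404:
# Invoke-DfsrCloneImport
```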

Ok, time for our TDFS2 machine to join DFS replication

Join destination machine to DFS replication

We will now add the TDFS2 machine to the Data01 replication group we already created.

I will run the following two commands from TDFS1:

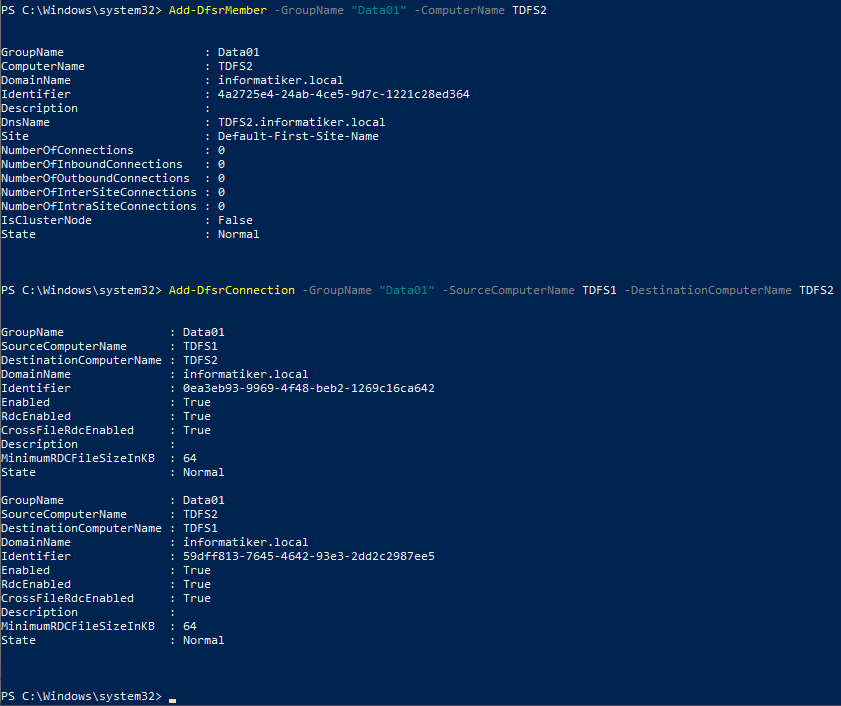

Add-DfsrMember -GroupName "Data01" -ComputerName TDFS2

Next, we define TDFS1 as the source and TDFS2 as the destination:

Add-DfsrConnection -GroupName "Data01" -SourceComputerName TDFS1 -DestinationComputerName TDFS2

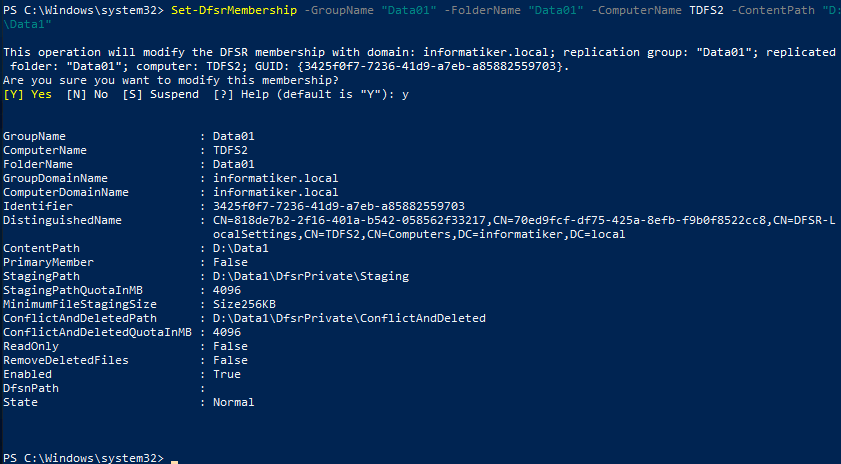

I will run the following command from TDFS2.

This sets D:\Data1 as the content path of the existing replicated folder Data01 (in the Data01 group) on TDFS2:

Set-DfsrMembership -GroupName "Data01" -FolderName "Data01" -ComputerName TDFS2 -ContentPath "D:\Data1"

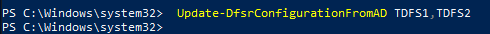

We will now update the configuration:

Update-DfsrConfigurationFromAD TDFS1,TDFS2

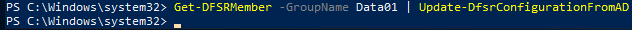

Or you can run the extended command below. It pulls all members of the Data01 group, so you don’t have to list every single server.

Get-DFSRMember -GroupName Data01 | Update-DfsrConfigurationFromAD

We now wait for Event 4104

Get-WinEvent "DFS Replication" -MaxEvents 4 | fl

We have event 4104. There is also one 5012 and one 5004, but 5004 means the connection between the nodes is established and everything is ok.
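Event 4104 indicates that initial replication has finished. A quick follow-up check is to ask for the backlog in both directions, because Add-DfsrConnection creates a two-way connection unless told otherwise. The sketch below loops over every ordered pair of members and counts what Get-DfsrBacklog returns. As far as I know, Get-DfsrBacklog returns only a limited number of entries by default, so treat the count as "zero or not zero" rather than an exact total. Get-DfsrBacklogSummary is a hypothetical helper, and the backlog call can be replaced for testing.

```powershell
# Hypothetical helper: backlog count for every ordered pair of members.
function Get-DfsrBacklogSummary {
    param(
        [string]$GroupName = 'Data01',
        [string]$FolderName = 'Data01',
        [string[]]$Members = @('TDFS1', 'TDFS2'),
        [scriptblock]$GetBacklog = {
            param($Group, $Folder, $Source, $Destination)
            Get-DfsrBacklog -GroupName $Group -FolderName $Folder `
                -SourceComputerName $Source -DestinationComputerName $Destination
        }
    )
    foreach ($src in $Members) {
        foreach ($dst in $Members) {
            if ($src -eq $dst) { continue }   # no backlog from a member to itself
            # @() turns "no output" into an empty array with Count 0.
            $items = @(& $GetBacklog $GroupName $FolderName $src $dst)
            [pscustomobject]@{ Source = $src; Destination = $dst; BacklogCount = $items.Count }
        }
    }
}

# Usage: everything is caught up when every BacklogCount is 0.
# Get-DfsrBacklogSummary | Format-Table
```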

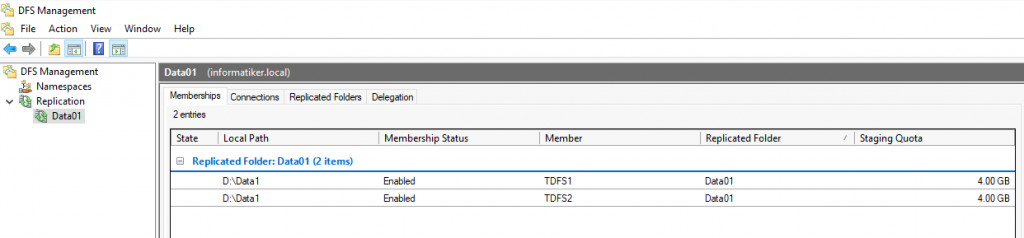

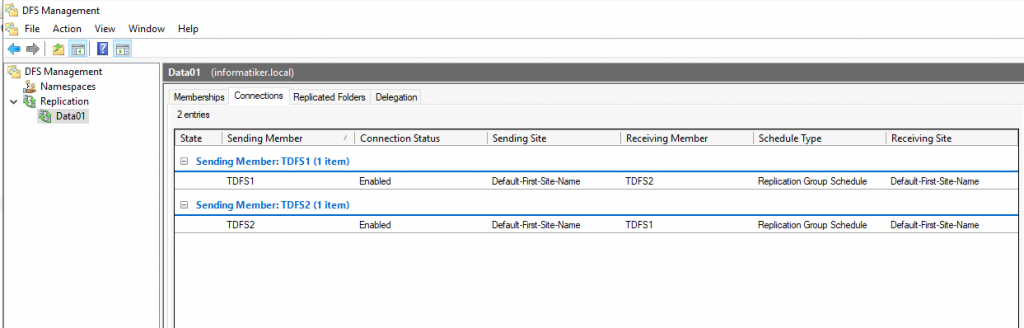

Since I like the GUI very much, I will also take a peek at DFS Management.

Looks fine

But is it fine?

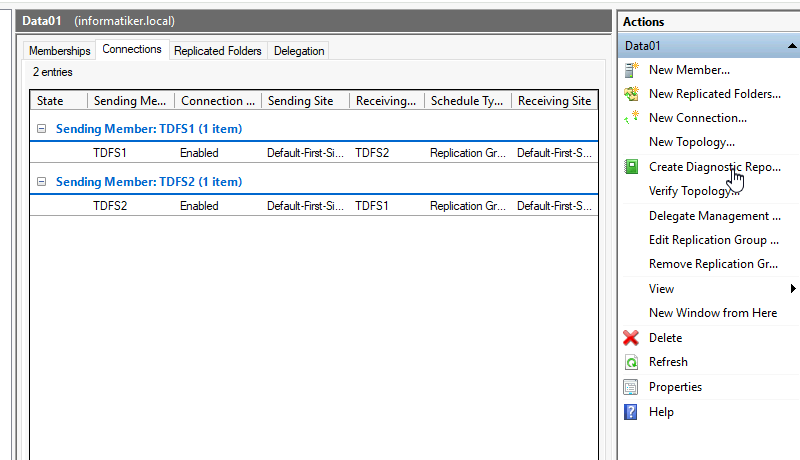

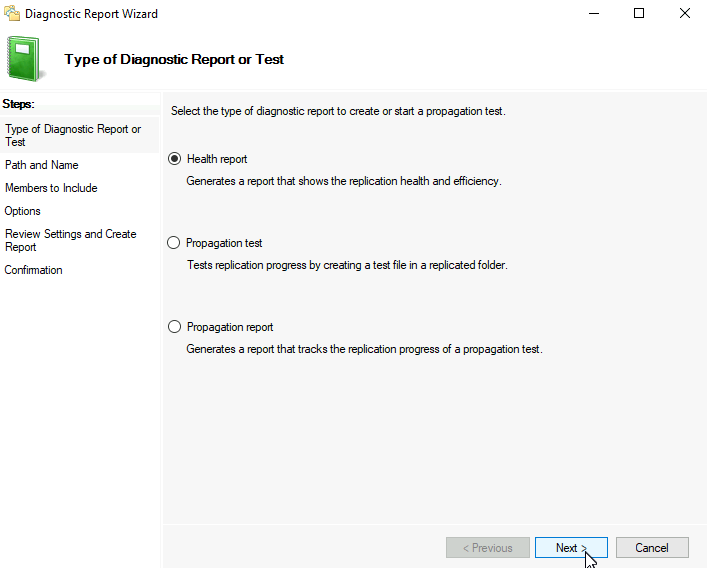

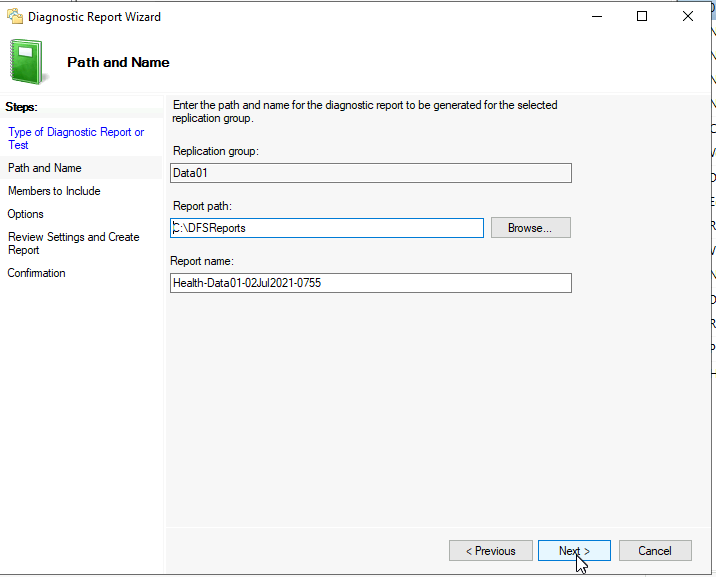

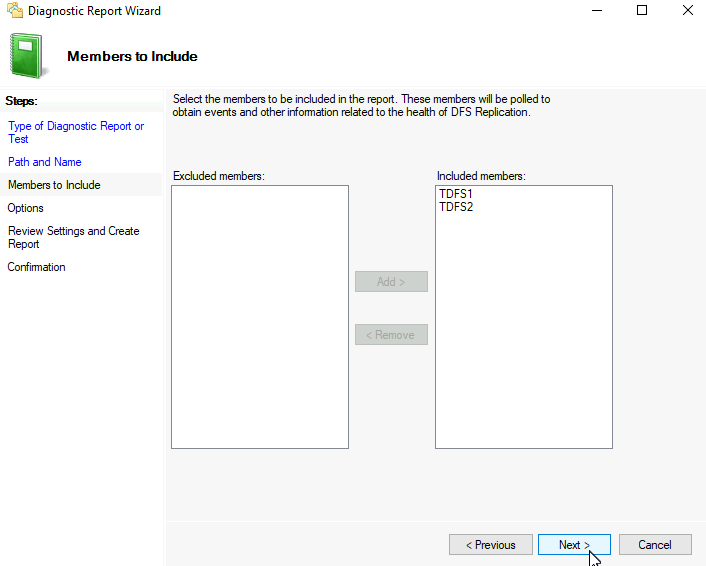

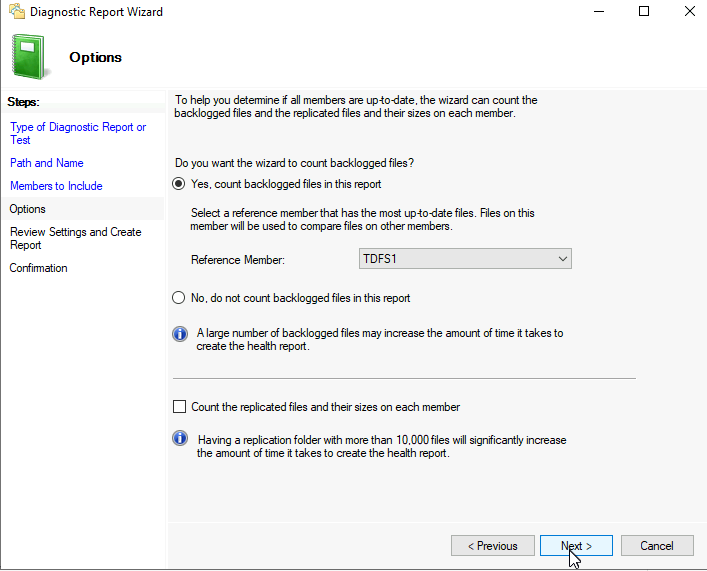

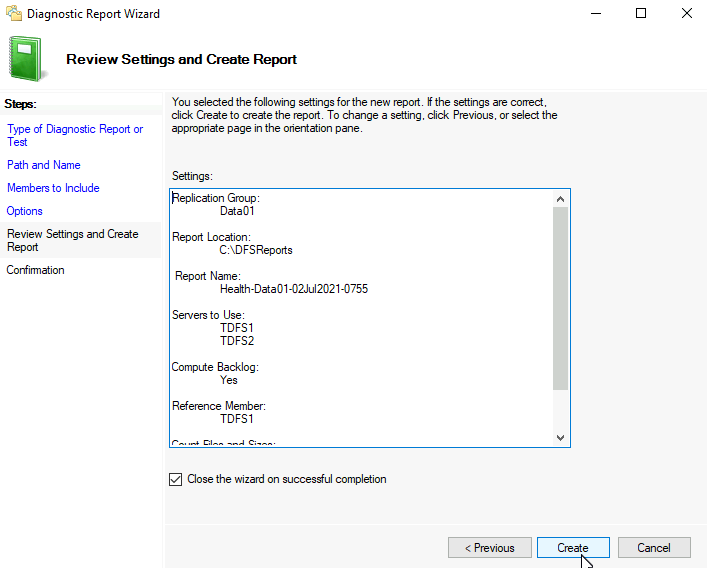

Let’s quickly create a diagnostic report from DFS Management.

This time I will create a health report, but a propagation test and report can also be created from here.

…

…

…

Create

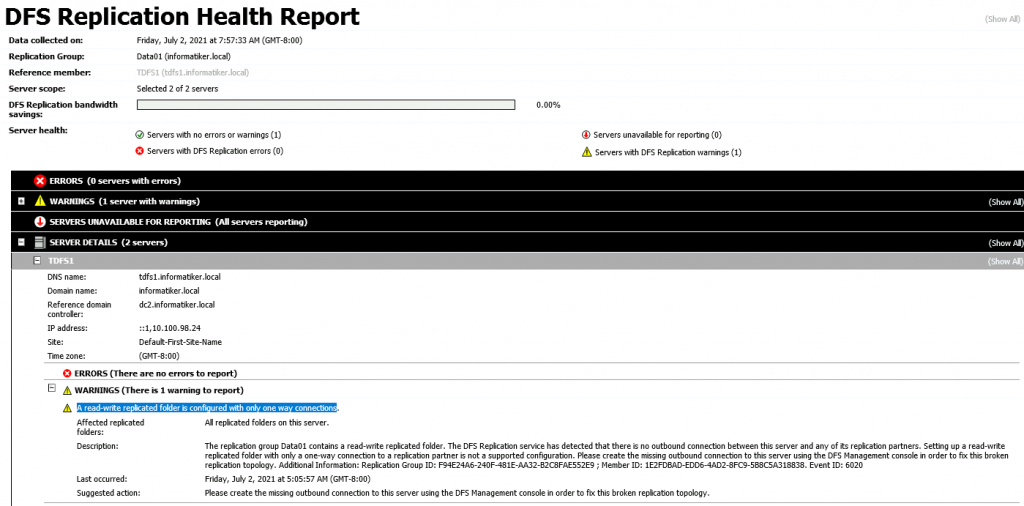

The report should be without errors. I basically got only error 6020, which we saw at the beginning of this scenario, so you can simply ignore it because we now have two-way replication.

Testing

I tested this by simply creating, editing and deleting files on both TDFS1 and TDFS2. Replication worked fine and was instant.
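The manual create/edit/delete test can also be scripted as a canary. Write a uniquely named file on one member and poll the other member's share until it shows up. This is a sketch under my own assumptions: the helper name, the 120-second timeout and the UNC paths in the usage line are all mine. It only proves that one new file arrived, not that the whole data set is in sync.

```powershell
# Hypothetical helper: drop a canary file on one side, wait for it on the other.
function Test-ReplicationCanary {
    param(
        [Parameter(Mandatory)][string]$SourcePath,
        [Parameter(Mandatory)][string]$DestinationPath,
        [int]$TimeoutSeconds = 120,
        [int]$PollSeconds = 5
    )
    $name = "canary-$([guid]::NewGuid().ToString('N')).txt"
    $sourceFile = Join-Path $SourcePath $name
    $destinationFile = Join-Path $DestinationPath $name
    Set-Content -LiteralPath $sourceFile -Value (Get-Date -Format o)
    try {
        $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
        while ($true) {
            if (Test-Path -LiteralPath $destinationFile) { return $true }
            if ((Get-Date) -ge $deadline) { return $false }
            Start-Sleep -Seconds $PollSeconds
        }
    } finally {
        # Remove the canary; DFSR replicates the deletion as well.
        Remove-Item -LiteralPath $sourceFile -ErrorAction SilentlyContinue
    }
}

# Usage from a machine with access to both shares:
# Test-ReplicationCanary -SourcePath '\\TDFS1\D$\Data1' -DestinationPath '\\TDFS2\D$\Data1'
```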

Also, my logs are clean and without errors. We can say we succeeded with this one.

If you run into trouble or errors with this, please look up the link I posted at the beginning of this guide. The man behind that article is an expert in the DFS field :)

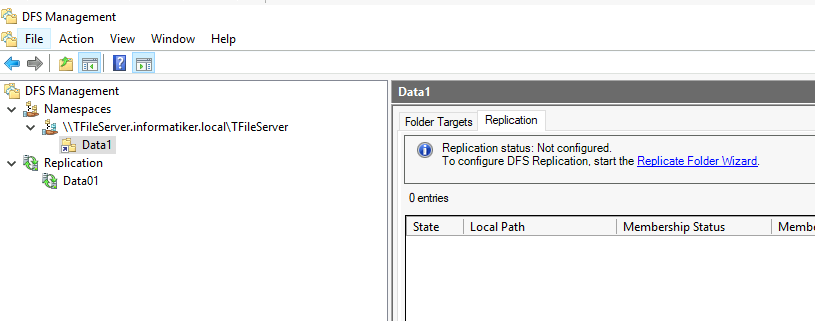

I also tried a scenario in which, after this initial step, I brought up a namespace cluster.

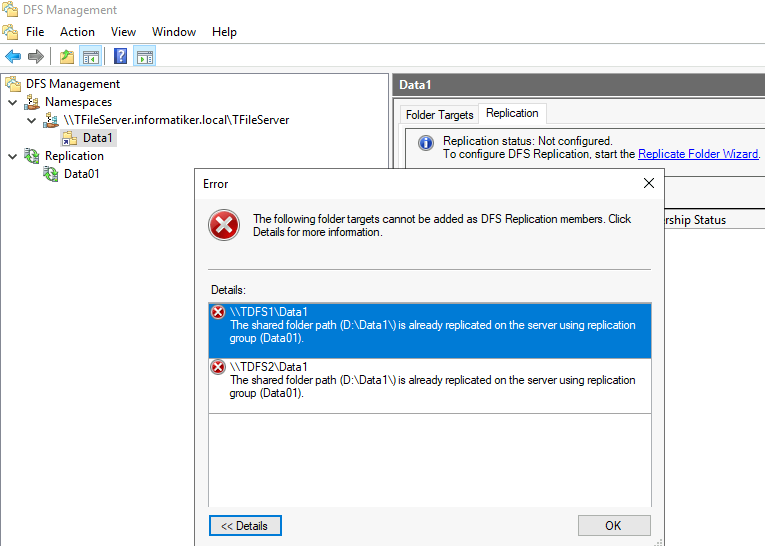

I shared the Data folders on both TDFS1 and TDFS2 and added the folder to the namespace. On the Replication tab you can see that the replication status is “Not Configured”.

If I start the “Replicate Folder Wizard”, I get the following “error”: The shared folder path… is already replicated on the server using replication group.

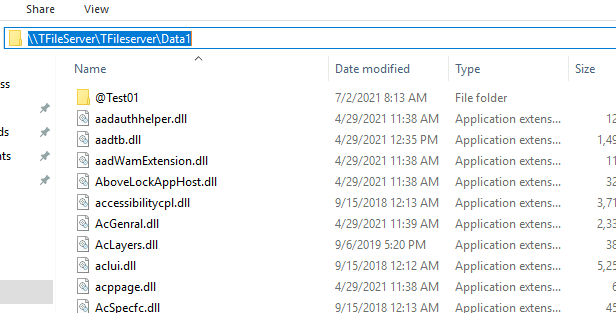

From a client machine in the same domain, I can access the namespace folder share.

Replication also works fine if I create, delete or edit a file from the client or on either DFS server. It is now all a matter of user rights.

So I would conclude that this works despite the little glitch in the namespace Replication tab. Namespaces and Replication are different services, so this should be fine.

Conclusion

If you have DFS in your life and you have to migrate it to new machines or new hardware, this is something you just have to try.

I haven’t done DFS replications larger than 5 TB and a million or two files, but Ned Pyle did this with more than 14 million files and data sets larger than 10 TB. It cut initial seeding down from 24 days to a little more than 7 hours in the fastest validation scenario (None selected).

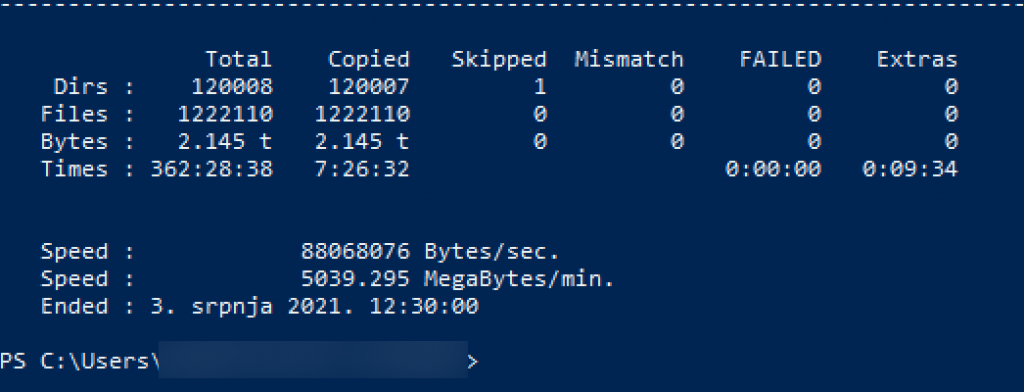

Here is my example with 2.1 TB and 1.2 million files. With Basic validation it took 7:26 hours to complete. This is a huuuugeee difference: we are literally talking 3-4 days (depending on your setup) for this scenario with classic seeding.
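To put those numbers in perspective, here is the arithmetic for my run. The sketch assumes that 2.1 TB means binary terabytes (PowerShell's TB suffix is 2^40 bytes). It uses the 3-4 day classic-seeding estimate from above as the baseline. Get-SeedRate is just a hypothetical helper for the calculation.

```powershell
# Hypothetical helper: throughput and speed-up for a seeding run.
function Get-SeedRate {
    param(
        [Parameter(Mandatory)][double]$Bytes,
        [Parameter(Mandatory)][double]$Files,
        [Parameter(Mandatory)][timespan]$Elapsed,
        [Parameter(Mandatory)][timespan]$Baseline
    )
    [pscustomobject]@{
        MBPerSecond    = [math]::Round($Bytes / 1MB / $Elapsed.TotalSeconds, 1)
        FilesPerSecond = [math]::Round($Files / $Elapsed.TotalSeconds, 1)
        SpeedUp        = [math]::Round($Baseline.TotalSeconds / $Elapsed.TotalSeconds, 1)
    }
}

$clone = New-TimeSpan -Hours 7 -Minutes 26        # Basic validation run
Get-SeedRate -Bytes 2.1TB -Files 1.2e6 -Elapsed $clone -Baseline (New-TimeSpan -Days 3)
Get-SeedRate -Bytes 2.1TB -Files 1.2e6 -Elapsed $clone -Baseline (New-TimeSpan -Days 4)
```

Under these assumptions, the run works out to roughly 82 MB/s and about 45 files per second. That is roughly 10x to 13x faster than the 3-4 day estimate for classic seeding.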

So if you want to save yourself some time, try this. I have been looking for this feature since the days of WS 2003/2008. I remember bluntly copying files from one DFS node to another and then starting replication, and the result was a complete mess and corruption. This is a very welcome update for DFS.

This method can be tricky, but is worth trying.